Ph.D. Progress Report

Designing for the Unspoken

Tacit Knowledge Transfer in High-Stress Environments

Iren Irbe

November 2025

Technical Implementation

Voice-Controlled Auditory Learning Assistant Based on GraphRAG (Graph Retrieval-Augmented Generation)

Problem 1: Tacit Knowledge Is Difficult to Capture and Transfer

During onboarding and offboarding, organizations struggle to retain and pass on employees’ unspoken, experience-based knowledge because it is rarely written down and hard to share.

Research Aim

To develop and evaluate a theoretical and technological approach for capturing, externalizing, and transferring tacit knowledge in high-stress public-sector environments.

The research investigates how a voice-controlled, GraphRAG-powered assistant can help preserve and reuse this hidden expertise through natural interaction and contextual learning support.

Tacit Knowledge

Michael Polanyi (1966) defined tacit knowledge as understanding that is "inherently difficult to articulate," summarized in his aphorism:

"We can know more than we can tell."

It encompasses know-how, mental models, and intuitions gained through action and experience.

The Transfer Challenge

Von Hippel (1994) coined the term "sticky information" to describe knowledge that is costly to transfer because it is context-dependent.

Tacit knowledge demonstrates this stickiness: its transfer relies on social interaction, observation, and shared experience rather than documentation.

Problem 2: Operational Pressures Prevent Tacit Knowledge Transfer

Data volume has overwhelmed traditional mentorship models.

The Big Data Reality

Agencies process over 10,000 hours of video every day.

80% of evidence is unstructured data (Europol, 2024).

The Human Consequence

Senior analysts spend 90% of their time on routine data processing.

The "Master-Apprentice" model requires time that no longer exists.

The Cognitive Bottleneck

Analysts’ valuable intuition gets buried under routine data work. Without AI to handle the repetitive tasks, there’s no time left for the human interaction needed to transfer tacit knowledge.

Situated Learning

Brown, Collins & Duguid (1989) established that knowledge is inseparable from the activity, context, and culture in which it develops.

The "Master-Apprentice" model depends on legitimate peripheral participation: novices learn by working alongside experts in authentic contexts.

Information Overload

Miller (1956) established that human working memory can hold approximately 7±2 items simultaneously.

When data volume exceeds this capacity, processing degrades. Modern intelligence environments routinely exceed these limits, creating the "cognitive bottleneck."

Presenter Notes

- Start with the sheer volume: 10k hours/day is unmanageable manually.

- Highlight the 10x growth in case data size (100GB to 1TB).

- Connect this to the "death of mentorship": seniors are too busy processing data to teach juniors.

- Use Miller's law to explain why this breaks the human brain (cognitive overload).

- Conclude with the bottleneck: AI is needed not just for speed, but to free up human capacity for tacit transfer.

Problem 3: Institutional Knowledge Disappears Faster Than It Can Be Replaced

The "Silver Tsunami" creates a knowledge vacuum that money cannot fill.

The Experience Gap

It takes 5–7 years of field experience to rebuild operational competence.

The Tangible Cost

6–8× Annual Salary

Direct training, recruitment, reduced productivity during ramp-up, supervision burden, and error costs.

The Intangible Cost

"Deep Smarts"

Unwritten heuristics, informant networks, and pattern recognition that are never documented in SOPs.

Why "Writing It Down" Is Insufficient

The Curse of Knowledge (Hinds, 1999): Experts systematically underestimate how difficult tasks are for novices because they cannot "un-know" what they have learned. They often fail to articulate the critical "why" behind their decisions because it feels obvious to them.

Deep Smarts (Leonard, 2005)

Expert intuition built through years of experience: pattern recognition, judgment under uncertainty, and contextual awareness.

Unlike explicit knowledge in manuals, deep smarts are transferred through guided experience and Socratic dialogue.

Inheritance Imperative

Hang & Zhang (2024) argue that when experts retire, organizations face a "knowledge inheritance crisis."

Departing expert knowledge is organizational inheritance that must be actively claimed through structured protocols.

Recognition-Primed Decision

Klein (1993) showed that experts do not deliberate options; they recognize patterns.

TacitFlow preserves these pattern libraries by capturing the cues experts notice.

Workforce Crisis

- OECD (2025): 40% of civil servants report burnout; 13% intend to leave within 12 months.

- Europol (2025): Urgent need for "state-of-the-art analytical competence" to address capability gaps.

Presenter Notes

- Hook: The "Silver Tsunami" isn't just about empty desks; it's about empty minds.

- Visual: Point to the gap. 20 years vs 2 months. That gap is where mistakes happen.

- Theory: Briefly mention "Deep Smarts" - it's not magic, it's pattern recognition (Klein).

- Problem: Explain "Curse of Knowledge" - experts can't teach this easily because they've forgotten what it's like not to know.

- Solution Tease: A system is needed that extracts this "obvious" knowledge before they leave.

Problem 4: Existing AI Cannot Be Used to Solve These Challenges

Security and reliability constraints rule out commercial solutions.

Why not just use ChatGPT? Because intelligence work operates under constraints that commercial AI cannot meet.

1. Sovereignty & Secrecy

Commercial clouds (e.g., OpenAI, Azure) are legally incompatible with Classified/RESTRICTED data due to jurisdiction risks (CLOUD Act). Data cannot leave the building.

2. The Hallucination Risk

Generative models invent facts. In a legal context, a single hallucination renders evidence inadmissible. The approach prioritizes Groundedness over creativity.

3. The Provenance Gap

"Black box" answers are useless in court. Intelligence requires a Chain of Custody for reasoning. This means knowing exactly which document led to which conclusion.

"The solution requires the power of LLMs without the risk of the Cloud or the unreliability of generation."

LLMs generate plausible-sounding but factually incorrect information because they predict tokens based on statistical patterns rather than verified facts. TacitFlow mitigates this via Retrieval-Augmented Generation (RAG). (Lewis et al., 2020)

Groundedness is the property that ensures AI outputs are anchored in verifiable source material. Unlike creative text generation, grounded reasoning requires every claim to trace back to documented evidence.
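The requirement that every claim trace back to documented evidence can be illustrated with a minimal sketch. It assumes generated output has already been split into claims that carry source IDs; the `Claim` structure, the function name, and the document IDs are hypothetical and are not taken from TacitFlow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    """One generated statement plus the source IDs it cites (hypothetical structure)."""
    text: str
    source_ids: tuple[str, ...]


def ungrounded_claims(claims: list[Claim], retrieved_ids: set[str]) -> list[Claim]:
    """Return claims that cite nothing, or cite a document that was never retrieved."""
    return [
        c for c in claims
        if not c.source_ids or not set(c.source_ids) <= retrieved_ids
    ]


# Illustrative data only: IDs of the documents returned by the retrieval step.
retrieved = {"doc-017", "doc-042"}
answer = [
    Claim("The vehicle was seen at the depot.", ("doc-017",)),
    Claim("The driver has prior convictions.", ()),             # cites no source
    Claim("The route matches an earlier case.", ("doc-999",)),  # source not retrieved
]

flagged = ungrounded_claims(answer, retrieved)
print([c.text for c in flagged])
```

A check like this only verifies that a citation exists and points at retrieved material; whether the cited document actually supports the claim would still need separate validation.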

W3C PROV-O (2013) provides the standard vocabulary for expressing provenance. TacitFlow uses PROV-O to maintain digital chain-of-custody, tracking who generated information, from what sources, through which processes.
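As a rough illustration of "who generated information, from what sources, through which processes," the sketch below records provenance as plain subject-predicate-object triples. The `prov:` property names are part of the W3C PROV-O vocabulary; the helper functions and every `ex:` identifier are assumptions made for this example, not TacitFlow's actual schema.

```python
from datetime import datetime, timezone


def provenance_triples(entity, activity, agent, sources, ended_at):
    """Build PROV-O style triples for one generated entity.

    The "prov:" terms are real PROV-O properties; the function itself
    and all identifiers passed in are illustrative assumptions.
    """
    triples = [
        (entity, "rdf:type", "prov:Entity"),
        (activity, "rdf:type", "prov:Activity"),
        (agent, "rdf:type", "prov:Agent"),
        (entity, "prov:wasGeneratedBy", activity),    # through which process
        (entity, "prov:wasAttributedTo", agent),      # who generated it
        (activity, "prov:wasAssociatedWith", agent),
        (activity, "prov:endedAtTime", ended_at.isoformat()),
    ]
    for src in sources:                               # from what sources
        triples.append((entity, "prov:wasDerivedFrom", src))
        triples.append((activity, "prov:used", src))
    return triples


def sources_of(triples, entity):
    """List the documents an entity was derived from (the chain-of-custody lookup)."""
    return sorted(o for s, p, o in triples if s == entity and p == "prov:wasDerivedFrom")


triples = provenance_triples(
    entity="ex:summary-1",
    activity="ex:summarize-run-1",
    agent="ex:assistant",
    sources=["ex:transcript-7", "ex:report-12"],
    ended_at=datetime(2025, 11, 3, 9, 30, tzinfo=timezone.utc),
)
print(sources_of(triples, "ex:summary-1"))
```

Plain tuples keep the example dependency-free; a real deployment would more likely serialize the same statements with an RDF library.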

Presenter Notes

- Address the "Build vs. Buy" question immediately.

- Emphasize that the project isn't building an LLM from scratch (too expensive), but can't use off-the-shelf ChatGPT (too risky).

- The "Hallucination" point is critical for legal admissibility. One error poisons the case.

- Explain the "Black Box" problem: judges need to know why an AI thinks a suspect is guilty.

Research Outputs

A portfolio of theoretical, empirical, and technical contributions.

| Status | Type | Title / Context |
|---|---|---|
| Published | Conf. Paper | "Designing for the Unspoken: A Work-in-Progress on Tacit Knowledge Transfer in High-Stress Public Institutions" (Irbe & Ogunyemi, 2025) |
| Published | Conf. Poster | "Investigating Tacit Knowledge Transfer in Public Sector Workplaces" (Irbe, 2025c) |
| In Review | Journal Article | "Capturing and transferring tacit knowledge: A scoping review" (Irbe, 2025a) |
| In Review | Journal Article | "Workplace Learning article" |
| Ready | Journal Article | "Knowledge sharing in high-stress public sector organizations: An exploratory study" (Target: J. of Knowledge Mgmt) |
| Draft | Theory Report | "From Intuition to Inference: An Approach for Implementing Tacit Knowledge Transfer" |
| Draft | Technical Report | "TacitFlow: A Voice-Controlled AI Assistant for Tacit Knowledge Transfer" |

The research follows a multi-pronged approach: establishing the theoretical gap (Scoping Review), validating the problem (Empirical Study), and proposing the solution (Technical Report).

The publication strategy targets high-impact journals in Knowledge Management and AI to bridge the gap between social science theory and technical implementation.

Presenter Notes

- This slide establishes academic rigor.

- Highlight the progression: Scoping Review -> Empirical Study -> Technical Implementation.

- Mention that the "In Review" articles are the core theoretical contributions.

- The "Draft" reports are the basis for this presentation and the prototype.

Research Questions

Four questions guide this doctoral research, each addressed through a distinct methodological approach.

RQ1: What current methods are used to capture and transfer tacit knowledge?

Method: Scoping Review

RQ2: What are the primary barriers and enablers that affect tacit knowledge sharing practices?

Method: Interview Study

RQ3: What are the current research trends and gaps in the field of tacit knowledge management?

Method: Scoping Review

RQ4: How can AI-mediated systems support tacit knowledge transfer in high-stress public sector environments?

Method: Software as Hypothesis

Emerging / Ill-defined: This question is intentionally open-ended. It will be refined based on the pilot validation results.

This study employs Design-Based Research (DBR), iterating between theory and practice to develop a working artifact. DBR bridges the gap between laboratory findings and real-world practice. (Wang & Hannafin, 2005)

RQ4 is an "emergent" question. In DBR, the intervention itself (TacitFlow) acts as a hypothesis. The study tests if the existence of such a tool changes the nature of the problem.

Presenter Notes

- RQ1 & RQ3 are theoretical foundations (the "What" and "Where").

- RQ2 is the empirical validation (the "Why not").

- RQ4 is the constructive contribution (the "How").

- Emphasize that RQ4 is not just about building software, but using software to probe the theoretical limits of tacit knowledge transfer.

Research Flow

From literature to prototype: a Design-Based Research trajectory.

Scoping Review

Analyzed 55 papers on tacit knowledge transfer and workplace learning to establish the theoretical baseline.

Interviews & Analysis

Conducted semi-structured interviews with 10 experts from high-stress public institutions to validate the problem space.

Key Findings

Identified that informal sharing is critical but fragile, often lost due to turnover and lack of structured capture mechanisms.

Early Prototype (TacitFlow)

Developed a voice-based AI assistant as a "Research Through Design" artifact to probe the feasibility of capture.

Next Steps

Co-design, evaluation, and testing through participatory design sessions with end-users.

Artifacts embody theoretical propositions. The prototype becomes a vehicle for testing ideas rather than merely an end product. (Zimmerman, Forlizzi & Evenson, 2007)

Software as Hypothesis means building systems specifically to validate theoretical constructs. TacitFlow is not a commercial product but a research instrument for testing the EASCI framework. (Leinonen et al., 2008)

Presenter Notes

- Walk through the timeline chronologically.

- Highlight that the project is currently at Step 4 (Prototype).

- Use the side notes to defend the "building software" part of a PhD. It's a methodological choice, not just engineering.

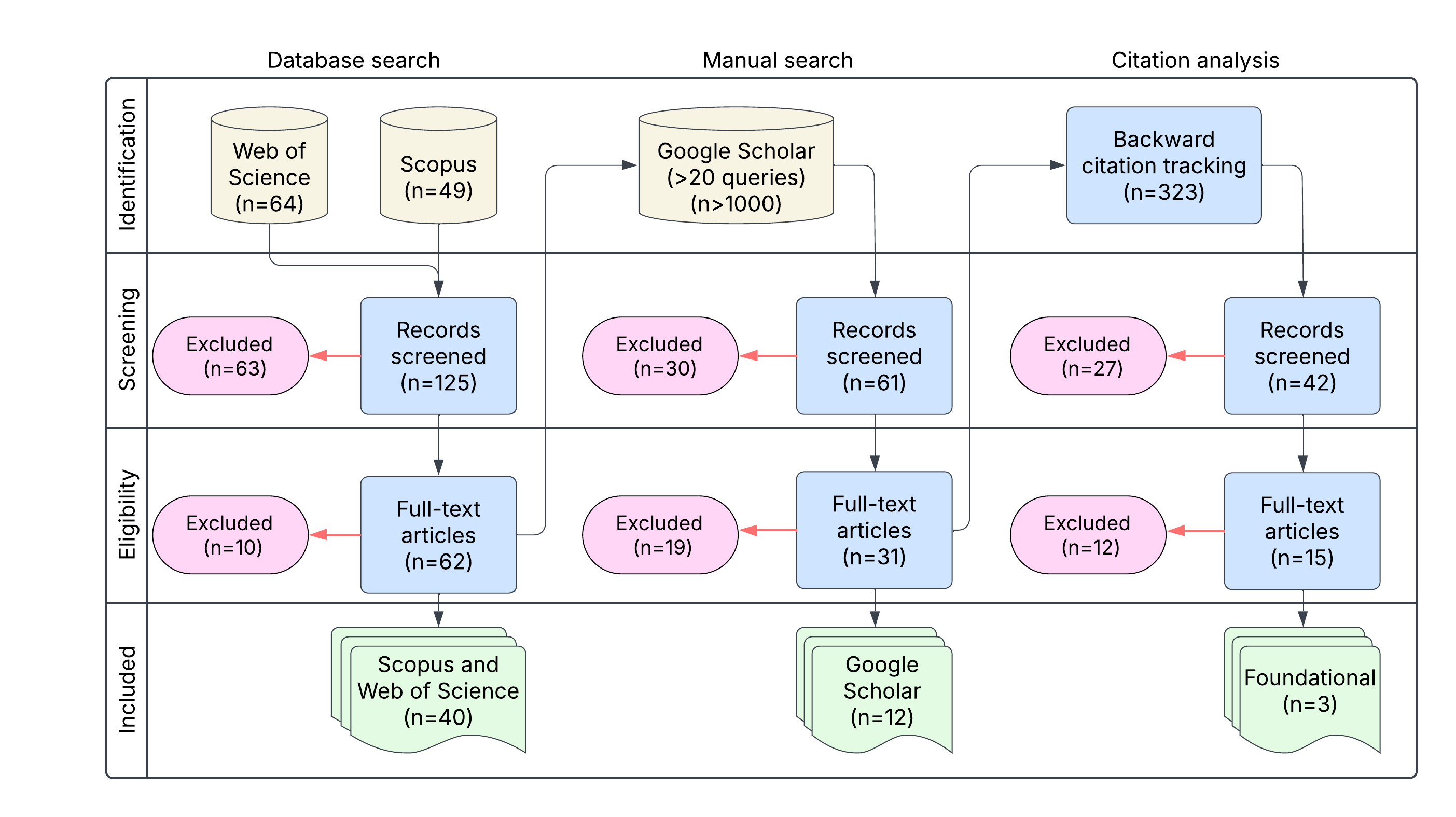

Scoping Review

Understanding how tacit knowledge is captured and transferred in organizations

This review examined methods, barriers, and enablers for organizational experience-sharing, with particular attention to transitions (onboarding/offboarding) in complex institutional settings.

| Parameter | Description |
|---|---|
| Corpus | 55 peer-reviewed studies + monographs (2019–2024) |
| Framework | Arksey & O'Malley (2005); PRISMA-ScR |
| Databases | Scopus, Web of Science, Google Scholar |
| Keywords | tacit knowledge · onboarding · offboarding · informal learning · public sector |
| Exclusion | Studies addressing only formal training or general KM systems |

Methodology

Arksey & O'Malley (2005) established the framework for scoping reviews, distinguishing them from systematic reviews by their broader exploratory purpose. Scoping reviews map key concepts, evidence types, and research gaps rather than synthesizing effect sizes.

PRISMA-ScR

Tricco et al. (2018) extended PRISMA reporting guidelines specifically for scoping reviews, adding items on rationale for review type selection and deviation from protocol. This enhances transparency and reproducibility in exploratory evidence synthesis.

Presenter Notes

- Emphasize the rigor: 55 studies selected from a much larger pool.

- Highlight the specific focus on "transitions" (onboarding/offboarding) as critical moments for tacit knowledge loss.

- Explain the exclusion of "formal training" - the focus is on the unspoken and informal aspects of knowledge transfer.

- The PRISMA flow diagram (right) visually demonstrates the filtering process.

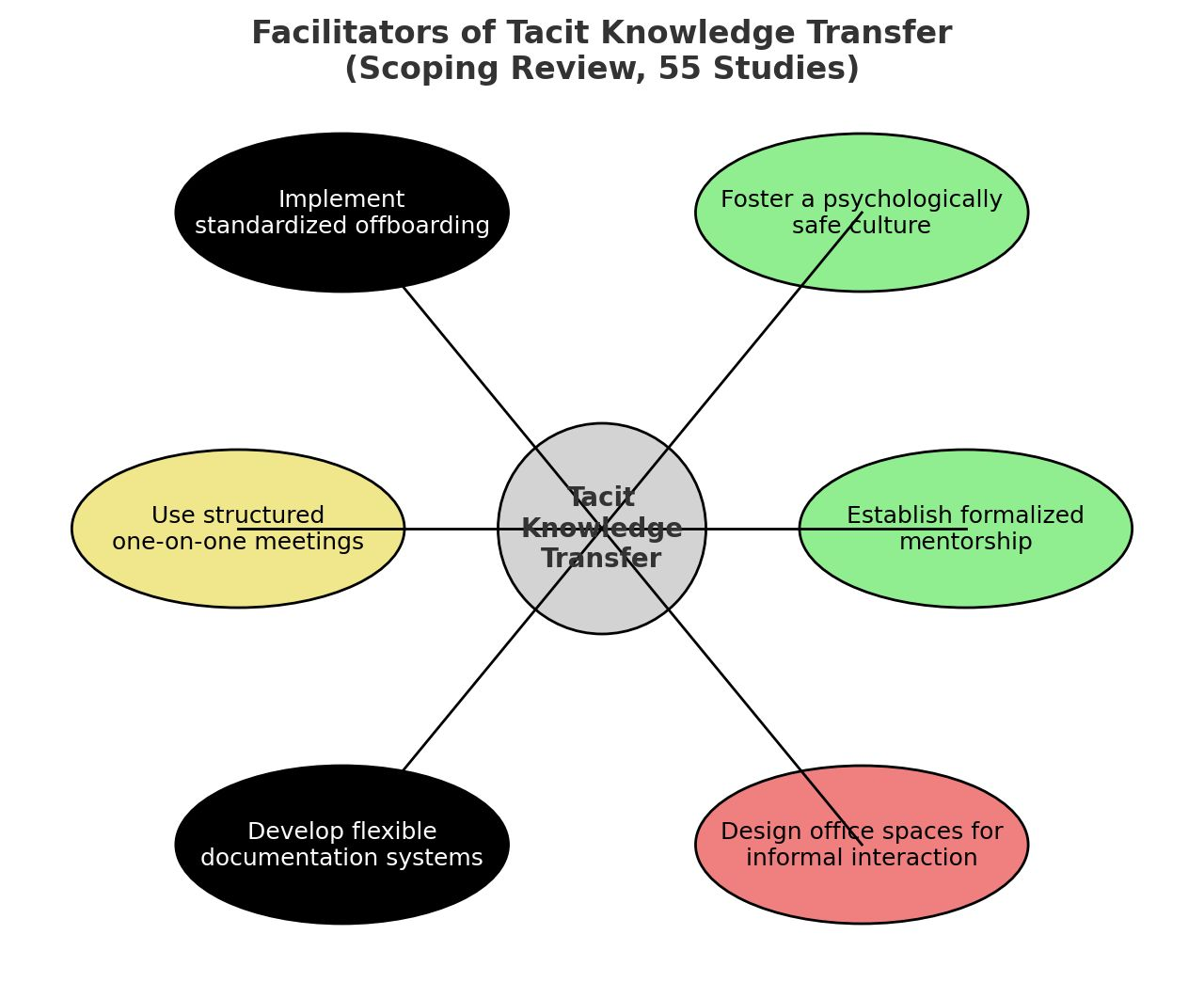

Key Findings from Scoping Review

Barriers, Enablers, and the Human-Centric Reality

Tacit knowledge transfer remains a deeply human-centered process. The most significant barriers are organizational, not technical. A gap exists at the "Externalization" stage; technology plays only a supplementary role.

Barriers vs. Enablers

Barriers

- Lack of trust between colleagues

- Limited time for knowledge sharing

- Weak knowledge-sharing culture

- No clear structure for transfer

Enablers

- Strong interpersonal relationships

- Open organizational culture

- Supportive leadership

- Psychological safety

The Externalization Gap

The SECI model assumes externalization is straightforward. The review found the opposite: converting tacit knowledge to explicit form is the primary bottleneck in knowledge transfer.

Key Insight

A gap exists between how people share knowledge in practice (socially) and how technology supports it (structurally).

Detailed Findings

Most workplaces rely on mentoring, storytelling, and job shadowing. AI or digital tools for experience sharing remain rare. While socialization methods are common, they rarely succeed in making tacit knowledge explicit.

Presenter Notes

- Discuss the "Externalization Gap" - this is where TacitFlow aims to intervene.

- Note the color coding: Green (Social) factors are enablers, while Gray/Yellow (Org/Structural) are often barriers.

- The diagram (Figure 3) synthesizes these factors into a holistic view of the transfer environment.

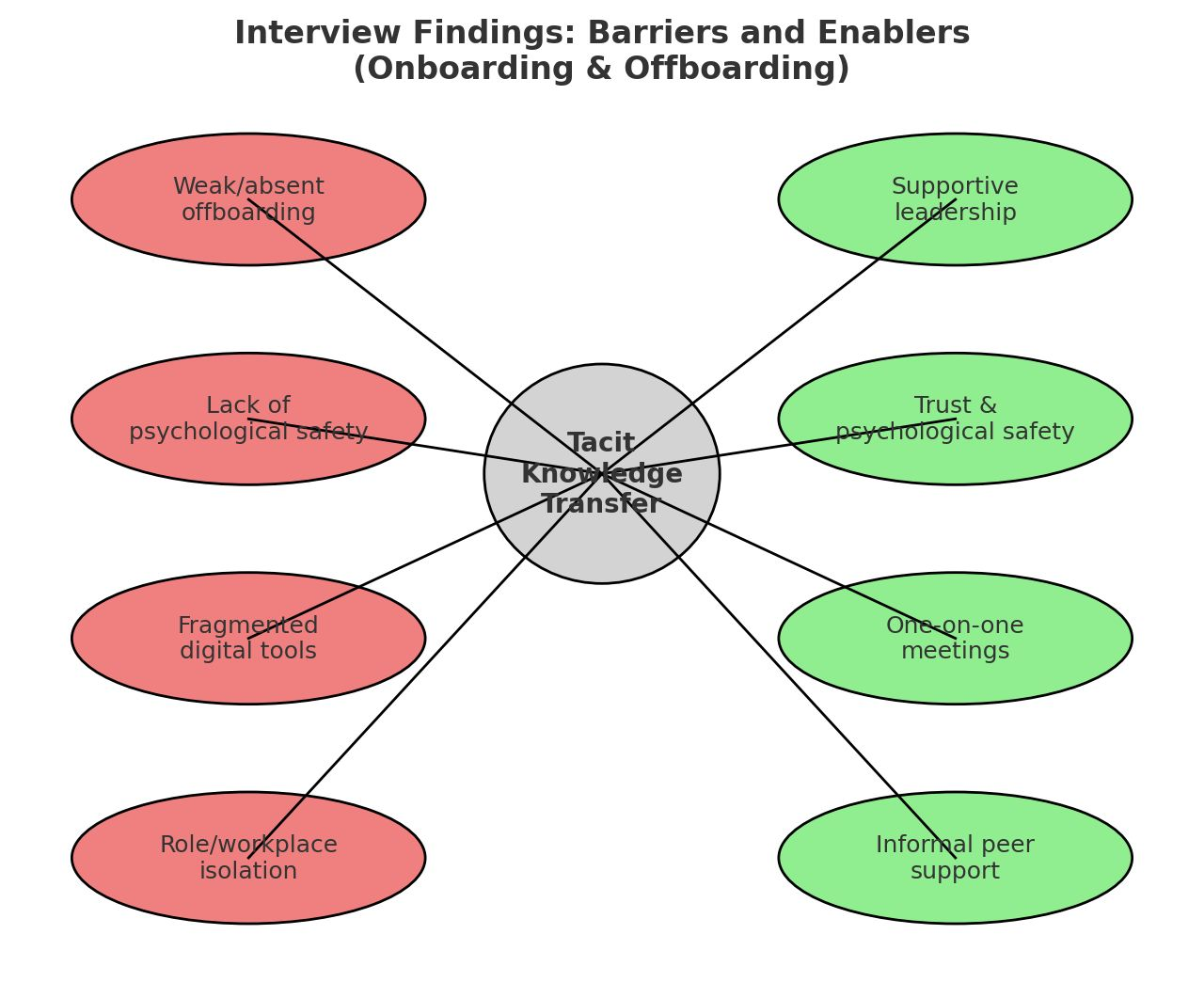

Interview-Based Empirical Study

Knowledge sharing in high-stress public sector organizations

| Dimension | Details |
|---|---|
| Goal | Explore real onboarding/offboarding practices in high-stress public institutions. |
| Participants | 10 professionals (6 managers, 4 specialists) across 6 Estonian organizations. |
| Approach | Semi-structured interviews (45–90 min) + Thematic Analysis. |
| Coding | Hybrid deductive-inductive approach (Braun & Clarke, 2006). |

"Knowledge transfer in organizations usually relies on informal and unstructured practices, such as spontaneous (ad hoc) mentoring or casual peer-to-peer storytelling."

Key Empirical Finding

Organizations rely heavily on informal practices because formal systems fail to capture experience-based judgment. Barriers include lack of psychological safety, isolated workspaces, outdated documentation, and missing offboarding procedures. These are precisely the gaps EASCI addresses.

Thematic Analysis

Braun & Clarke (2006) established thematic analysis as a qualitative method. Their six-phase process (familiarization, coding, theme generation, review, definition, write-up) enables systematic pattern identification while remaining accessible. Unlike grounded theory, it permits both inductive and deductive coding.

From Interviews to EASCI

This study revealed that tacit-to-explicit conversion does not happen in one leap (as SECI suggests). Interview data showed knowledge transfer requires multiple deliberate stages: initial recognition of experience, tentative articulation, structured formalization, social validation, and adaptive refinement.

Participating Organizations

- EASS: Estonian Academy of Security Sciences

- KRA: Defence Resources Agency

- MoD: Ministry of Defence

- PBGB: Police & Border Guard Board

- RIA: Information System Authority

- SMIT: IT & Development Centre

Presenter Notes

- Interviews were conducted with 10 professionals from high-stress environments (Police, Defense, IT).

- Used Braun & Clarke's thematic analysis to identify patterns.

- Crucial Insight: The "leap" from tacit to explicit is too big for a single step. It requires a staged approach.

- This empirical evidence directly informed the 5-stage EASCI framework discussed next.

Interview Findings

Tacit knowledge in Estonian public sector organizations

When experienced personnel depart, they take "sticky" tacit knowledge with them. This knowledge is embedded in intuition, informal routines, and "gut feelings" rarely captured in formal manuals.

Key Observations

- Informal Sharing: Relies on mentoring, teamwork, and social interaction.

- Weak Capture: Experience stays in heads; systematic capture is rare.

- Barriers: Stress, time pressure, lack of structure & safe spaces.

- Enablers: Trust, open culture, and psychological safety.

- Offboarding: Often unplanned, unstructured, and rushed.

"Sticky" Tacit Knowledge

Von Hippel (1994) coined "sticky information" to describe knowledge costly to transfer. Tacit knowledge is deeply embedded in context, making it resistant to codification.

Current Mechanisms

Transfer relies on ad-hoc mentoring ("watch me do this"). This breaks down during rapid turnover or when key personnel leave unexpectedly.

Practitioner Interest

Participants expressed a strong preference for tools that make sharing easier. Voice-based AI assistants resonated well as a means of quick, natural capture.

Presenter Notes

- Define "Sticky Knowledge" - it costs money and time to move it.

- Point out the red bars in the list: Barriers and Offboarding are major pain points.

- The diagram shows the environment where this happens.

- Mention the "Voice AI" finding - this validates the technical approach later.

The Externalization Gap

Where knowledge transfer fails in practice

Core Finding: Organizations succeed at Socialization (sharing experience) but fail at Externalization (converting experience into reusable knowledge).

Evidence: Scoping Review

Of 55 studies analyzed, most focus on socialization methods. Few address how technology can support externalization.

Gap: Theoretical & Technological

Evidence: Field Interviews

Across 10 practitioners, strong informal sharing cultures exist, but knowledge vanishes when experts depart.

Gap: Practical & Organizational

Research Direction: Dual Gap Convergence

The theoretical gap (missing models) and practical gap (missing processes) converge on a single need: tools that transform spoken experience into preserved, reusable knowledge. This points toward voice-based solutions that align with how experts naturally articulate experience.

SECI Model Context

Nonaka & Takeuchi (1995) describe four knowledge conversion modes. While organizations excel at Socialization (mentoring), they struggle with Externalization (articulating concepts).

Convergent Validity

Literature and field data independently identify the same failure point, strengthening confidence in this finding.

Documented Loss

Cho et al. (2020) documented this in Security Operations Centers: tacit expertise remains "trapped in heads" until personnel depart, then vanishes.

Presenter Notes

- This slide is the "Pivot Point" of the presentation.

- The problem (Tacit Knowledge Loss) and its specific mechanism of failure (Externalization) have now been identified.

- The visual flow makes it obvious: humans are good at talking (Socialization) but bad at capturing (Externalization).

- This sets up the solution: TacitFlow is an Externalization Engine.

TacitFlow Prototype: Concept and Features

A voice-controlled AI assistant for capturing and retrieving tacit knowledge

Based on interview findings, TacitFlow is being developed as an AI voice assistant that captures and connects everyday experience so it can be reused by others.

Core Functionality

- Capture: Speak out short experiences, tips, or lessons.

- Store: Save automatically as searchable knowledge.

- Retrieve: Get spoken answers, contextual suggestions, or text search results.

Knowledge Object Output

Captured speech becomes structured data:

- Searchable Transcription: Full text index.

- Semantic Tags: Automated categorization.

- Source Attribution: Context & provenance.

- Confidence Scores: AI processing metadata.
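The four output fields and the Capture / Store / Retrieve steps can be pictured with a small in-memory sketch. It assumes speech has already been transcribed and tagged upstream; the class names, field names, and the simple keyword match are illustrative assumptions, not TacitFlow's actual data model or retrieval method.

```python
from dataclasses import dataclass, field


@dataclass
class KnowledgeObject:
    """Hypothetical shape of one captured item, mirroring the four fields above."""
    transcription: str                              # searchable full text
    tags: list[str] = field(default_factory=list)   # semantic tags
    source: str = "unknown"                         # source attribution / context
    confidence: float = 0.0                         # AI processing metadata, 0..1


class KnowledgeStore:
    """Toy in-memory store; a real system would use an index or graph database."""

    def __init__(self) -> None:
        self._items: list[KnowledgeObject] = []

    def capture(self, transcription, tags, source, confidence) -> KnowledgeObject:
        """Capture + Store: wrap transcribed speech and keep it."""
        obj = KnowledgeObject(transcription, list(tags), source, confidence)
        self._items.append(obj)
        return obj

    def retrieve(self, query: str, min_confidence: float = 0.0) -> list[KnowledgeObject]:
        """Retrieve: naive match where every query word must occur in text or tags."""
        words = query.lower().split()
        hits = []
        for obj in self._items:
            haystack = (obj.transcription + " " + " ".join(obj.tags)).lower()
            if obj.confidence >= min_confidence and all(w in haystack for w in words):
                hits.append(obj)
        return hits


store = KnowledgeStore()
store.capture("Always phone the duty officer before a shift handover.",
              ["handover", "escalation"], "senior analyst, debrief", 0.92)
store.capture("Check the camera timestamp offset first.",
              ["video"], "specialist, onboarding", 0.70)

for hit in store.retrieve("handover"):
    print(hit.source, "->", hit.transcription)
```

Keeping the confidence score on the object lets retrieval filter out low-quality transcriptions, which is one plausible use of the "AI processing metadata" field.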

Target Use Cases

1. Retirement Capture: Preserving senior personnel expertise before they leave.

2. Accelerated Onboarding: Reducing the competence-building period for new hires.

3. Operational Debriefs: Capturing lessons immediately after incidents.

4. Cross-Institutional Sharing: Making tacit knowledge searchable across silos.

5. Research Platform: Testing theoretical assumptions about AI mediation.

Why Voice?

- Natural: Experts articulate experience through storytelling.

- Focus: Speaking allows capture without breaking concentration.

- Context: Voice preserves narrative structure and reasoning.

Software as Hypothesis

TacitFlow is a research instrument (Leinonen et al., 2008). It operationalizes concepts from knowledge management theory to allow empirical validation. The study tests: Can AI mediate the tacit-to-explicit conversion?

Workflow Integration

TacitFlow requires no separate login or forms. It integrates into existing work activities, capturing knowledge through passive listening or brief voice prompts.

Presenter Notes

- Introduce TacitFlow as the direct response to the "Externalization Gap".

- Highlight the "Voice First" approach - it's not just a feature, it's the core design philosophy to lower friction.

- Walk through the 3 steps: Capture, Store, Retrieve.

- Emphasize that this is "Software as Hypothesis" - it was built to test the theory.

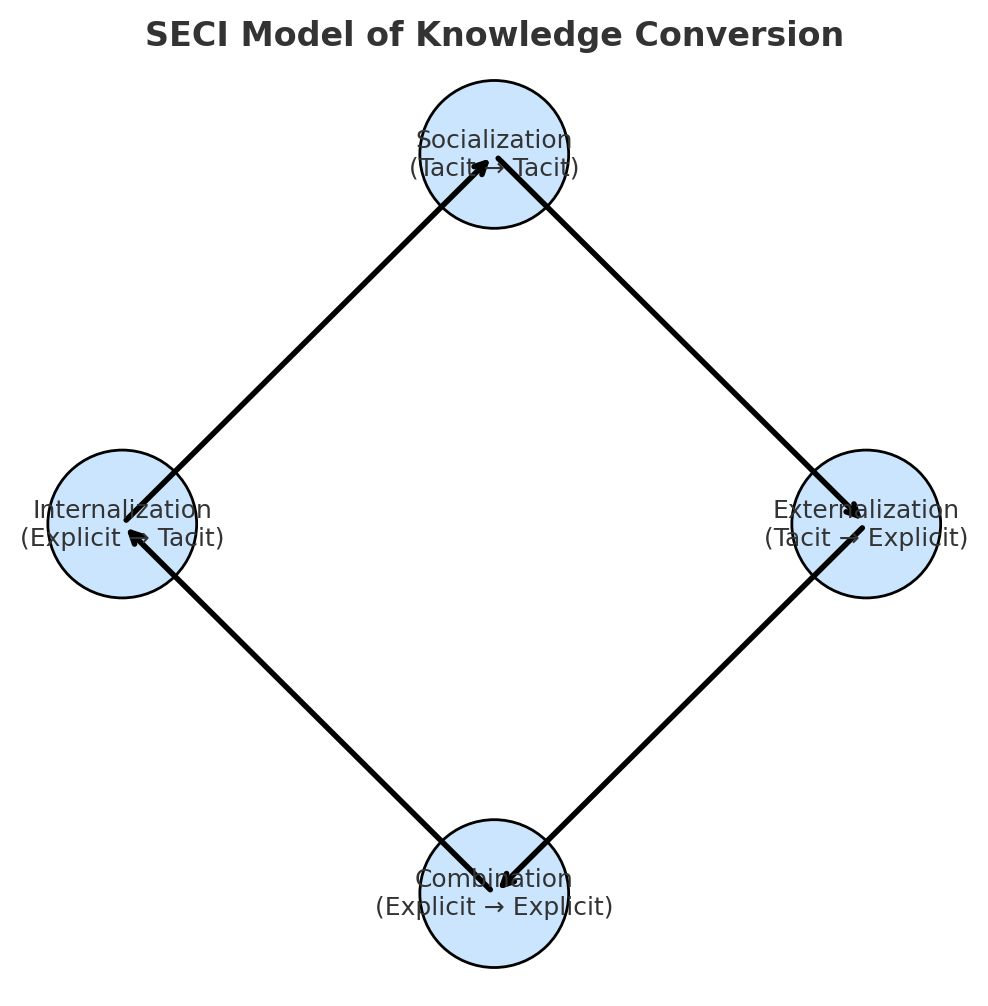

SECI Model

The dominant theoretical framework for organizational knowledge creation

Before examining why the Externalization Gap exists, one must understand the theoretical framework that defines it. Nonaka & Takeuchi (1995) proposed the SECI model as a continuous spiral of knowledge conversion between tacit and explicit forms.

The Four Conversion Modes

- Socialization (Tacit → Tacit): Sharing through experience, such as apprenticeship, mentoring, and shadowing.

- Externalization (Tacit → Explicit): Articulating concepts into shareable form. The critical bottleneck.

- Combination (Explicit → Explicit): Systemizing knowledge into documents, databases, procedures.

- Internalization (Explicit → Tacit): Learning by doing; explicit knowledge becomes embodied skill.
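For readers who think in data structures, the four modes can be written as a small lookup from source form to target form, with the spiral modeled as a cycle through them. This is only a sketch of the model as summarized on this slide; the names `Form`, `Mode`, `mode_for`, and `next_mode` are invented for the example.

```python
from enum import Enum


class Form(Enum):
    TACIT = "tacit"
    EXPLICIT = "explicit"


class Mode(Enum):
    """SECI modes as (source form, target form) pairs, in spiral order."""
    SOCIALIZATION = (Form.TACIT, Form.TACIT)
    EXTERNALIZATION = (Form.TACIT, Form.EXPLICIT)
    COMBINATION = (Form.EXPLICIT, Form.EXPLICIT)
    INTERNALIZATION = (Form.EXPLICIT, Form.TACIT)

    @property
    def source(self) -> Form:
        return self.value[0]

    @property
    def target(self) -> Form:
        return self.value[1]


def mode_for(source: Form, target: Form) -> Mode:
    """Look up which conversion mode turns `source` knowledge into `target` knowledge."""
    return Mode((source, target))


def next_mode(mode: Mode) -> Mode:
    """Advance one step along the spiral, wrapping from Internalization to Socialization."""
    order = list(Mode)
    return order[(order.index(mode) + 1) % len(order)]


print(mode_for(Form.TACIT, Form.EXPLICIT).name)
print(next_mode(Mode.INTERNALIZATION).name)
```

The wrap-around in `next_mode` reflects the idea that knowledge cycles through all four modes repeatedly rather than ending after Internalization.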

Research Starting Point

The research started with the SECI model, but its main weakness quickly became clear in high-stress environments: it assumes that Externalization will happen if people simply try hard enough.

Why SECI?

Nonaka and Takeuchi's The Knowledge-Creating Company (1995) is among the most cited works in management science. SECI has shaped knowledge management practice for three decades.

The Spiral Concept

SECI operates as a continuous spiral rather than a linear sequence. Knowledge cycles through all four modes repeatedly.

Academic Critiques

- Gourlay (2006): Externalization lacks operational definition. It's a metaphor without a mechanism.

- Tsoukas (2003): Tacit knowledge cannot be fully "converted". It remains context-dependent.

- Snowden (2002): SECI oversimplifies context, assuming universal applicability.

Presenter Notes

- Briefly explain the 4 quadrants. Don't get bogged down in theory, but establish the vocabulary.

- Focus on the Red Quadrant (Externalization): This is where the magic is supposed to happen, but often doesn't.

- Mention the critiques: The project isn't the first to say SECI is imperfect, but it proposes a specific fix for the Externalization step.

The Limits of SECI in High-Stress Environments

Why the "Knowledge Spiral" Breaks Down Under Pressure

SECI assumes Externalization occurs naturally given sufficient motivation. It describes that conversion happens, not how. This lack of operational mechanism causes failure in high-stress contexts.

The Core Problem

- Operational Gap: SECI describes process, not mechanism. It offers a metaphor without a method for extraction.

- High-Stress Failure: Standard interventions (e.g., "lessons learned") fail when investigators cannot articulate "gut feel" pattern matching.

Why SECI Fails for AI

- Context Blindness: SECI relies on shared physical space (Ba). AI lacks this "situated cognition."

- The Articulation Gap: Metaphor is insufficient for code. AI needs structured "Intuition Pumps" to elicit reasoning.

- Missing Verification: SECI relies on social consensus. AI needs explicit "Quality Gates" to prevent hallucination.

EASCI: Operationalizing SECI

Where SECI offers a metaphor, EASCI supplies the engineering: specific protocols for externalization, quality gates for verification, and AI mediation for structure.

"We can know more than we can tell."

— Michael Polanyi (1966)

Collins' Taxonomy (2010)

- Relational: Concealed by social dynamics.

- Somatic: Physically embodied skill.

- Collective: Distributed across a team.

Theoretical Limits

- Gourlay (2006): "Externalization" lacks operational definition.

- Tsoukas (2003): Context is inseparable from tacit knowledge.

- Von Hippel (1994): "Sticky information" resists transfer.

Presenter Notes

- Start with the Polanyi quote: it's the fundamental truth of this domain.

- Explain that SECI works fine for "business as usual" but breaks when things get chaotic.

- Key Point: AI cannot just "absorb" culture like a human apprentice. It needs explicit structures.

- Introduce EASCI as the "engineering fix" to SECI's "theoretical bug."

SECI Model: The Externalization Gap

Why SECI proved insufficient as a blueprint for system design

Scoping Review, Interviews, and Theoretical Analysis showed the same problem: SECI helps people build trust, but it does not help them express complex, experience-based knowledge.

Theoretical Failures

- Articulation Barrier: Tacit knowledge is hard to explain. "We can know more than we can tell."

- Mechanism Gap: "Externalization" is a metaphor, not a process. No operational definition exists.

- Missing Cognition: SECI ignores inference. Abduction and intuition pumps are required.

Example: Intuition Pump

"Imagine a new colleague is about to handle their first high-pressure incident alone. What's the one thing you'd want them to know that isn't in any manual?"

This bypasses the "what do you know?" block by creating a concrete, protective scenario.
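One way to picture scenario-based elicitation is as a prompt template that swaps the direct question for a concrete scene. The sketch below simply parameterizes the example above; the function name and its two parameters are hypothetical and do not describe how TacitFlow generates its probes.

```python
def intuition_pump(role: str, situation: str) -> str:
    """Frame a scenario-based question instead of asking "what do you know?".

    `role` and `situation` are illustrative parameters for this sketch.
    """
    return (
        f"Imagine {role} is about to handle {situation} alone. "
        "What's the one thing you'd want them to know that isn't in any manual?"
    )


prompt = intuition_pump("a new colleague", "their first high-pressure incident")
print(prompt)
```

Changing the role or the situation yields further probes in the same protective framing, for example a night-shift replacement facing an unfamiliar system outage.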

"SECI was not enough for building a real system because it doesn’t explain how tacit knowledge becomes explicit. It describes the idea but not the mechanism."

Abductive Reasoning

Peirce (1903): The process of forming explanatory hypotheses. Unlike deduction (proving) or induction (generalizing), abduction infers the best explanation for a specific situation.

Intuition Pumps

Dennett (2013): Thought experiments designed to elicit intuitions. They bypass the Polanyi Paradox by asking "what would you do if...?" rather than "what do you know?".

Key References

- Polanyi (1966): The Tacit Dimension.

- Gourlay (2006): "Conceptualizing Knowledge Creation."

- Busch (2008): "Tacit Knowledge in Organizational Learning."

Presenter Notes

- Walk through the flow diagram: Green works, Red is broken.

- Explain why it's broken: The "Mechanism Gap."

- Use the "Intuition Pump" example to show how the system fixes it. Instead of asking "tell me what you know," the system asks "what would you tell a rookie?"

- This shift from direct questioning to scenario-based elicitation is the core of the approach.

The Role of AI in Tacit Knowledge Capture

Can a voice-controlled agent help externalize tacit knowledge?

The Externalization Problem

Tacit knowledge externalization fails because experts cannot easily verbalize what they know. Polanyi (1966) summarized this as "we can know more than we can tell." This is compounded by the Curse of Knowledge (Hinds, 1999): experts struggle to imagine what novices don't know, making direct transfer ineffective. Interview findings confirm that experts struggle to articulate reasoning under time pressure.

Socratic Mediation

TacitFlow does not replace human reasoning; it acts as a Socratic interviewer that prompts reflection and forces articulation (Dennett, 2013: intuition pumps; Dewey, 1938: inquiry as reflective practice). This differs from retrieval-augmented generation: the AI elicits rather than merely retrieves.

Why Not Traditional KM?

Traditional KM tools cannot support abductive or narrative reasoning (Gourlay, 2006; Collins, 2010). AI agents can observe interaction patterns, ask clarifying questions, and assist in forming structured Knowledge Objects, shifting from passive storage to active mediation.

Research Through Design

The methodological foundation is Research Through Design (Zimmerman, Forlizzi & Evenson, 2007), where the system is built to test theoretical assumptions. Leinonen et al. (2008) describe this as "Software as Hypothesis": a prototype used to validate theoretical constructs, not a commercial product.

This theoretical position creates the bridge to the EASCI framework.

The Socratic Method

Named after Socrates (c. 470–399 BCE), the method uses guided questioning rather than direct instruction. The teacher asks probing questions that expose contradictions, forcing the learner to refine their understanding. TacitFlow's AI probes follow this tradition: asking questions that help experts articulate what they know.

Iterative Refinement

DBR's iterative cycles distinguish it from traditional experiments. Each TacitFlow iteration generates usage data that informs the next design phase, embodying DBR's commitment to theory-practice integration.

Cognitive Load Theory

Sweller (1988): Working memory has strict limits. TacitFlow's voice interface minimizes extraneous load (typing/navigating) to preserve germane load (reflection/articulation).

Recognition-Primed Decision

Klein (1993): Experienced practitioners recognize patterns rather than deliberating options. TacitFlow preserves these pattern libraries by capturing the cues experts notice.

Complex Thinking

Morin (2008): Complex phenomena require multiple perspectives. TacitFlow synthesizes pragmatism, process philosophy, organizational learning, and cognitive science.

Deep Smarts

Leonard (2005): Expert intuition built through years of experience. Unlike explicit knowledge in manuals, deep smarts are transferred through guided experience and Socratic dialogue.

Psychological Safety

Edmondson (1999): Essential for sharing. Experts must feel safe revealing their "tricks" without fear of judgment. TacitFlow addresses this through anonymous draft submission and mentor-mediated validation.

Mental Models

Internal representations (e.g., "suspicious behavior"). TacitFlow captures mental models through structured articulation and makes them visible for peer validation.

Avoiding Competency Traps

March (1991): Organizations that over-exploit existing knowledge fall into "competency traps." EASCI balances exploitation (Consolidation) with exploration (Innovation).

Process Philosophy

Whitehead: Actual Entities are "drops of experience." Concrescence is the integration of data into a new unity. In TacitFlow, each Knowledge Object is a concrescence of prior data, perspective, and AI structuring.

Collins' Taxonomy (2010)

Relational: Concealed by social dynamics.

Somatic: Embodied.

Collective: Social.

TacitFlow targets relational and somatic knowledge through conversational elicitation.

Experiential Learning

Kolb (1984): Concrete Experience → Reflective Observation → Abstract Conceptualization → Active Experimentation. EASCI maps directly onto this cycle.

Miller's Law (7±2)

Working memory holds ~7 chunks. EASCI's structured stages respect this limit by processing knowledge incrementally.

Curse of Expertise

Hinds (1999): Experts cannot "unlearn" expertise to imagine a beginner's perspective. TacitFlow's AI probes address this by asking the questions novices would ask.

Presenter Notes

- The "Why": AI is used not just to record, but to provoke.

- The "How": Socratic questioning. The AI plays the role of the "naive learner" to force the expert to explain things clearly.

- Theoretical Basis: This isn't just a tech demo; it's "Research Through Design." It was built to test if this kind of capture is even possible.

- Highlight Psychological Safety: If cops/firefighters don't feel safe, they won't talk. The system is designed for that.

A Proposed Alternative to SECI: The EASCI Framework

How TacitFlow operationalizes SECI, Pragmatism, and Process Philosophy

EASCI addresses the Externalization Gap identified in SECI. Where SECI assumes tacit-to-explicit conversion happens spontaneously, EASCI decomposes it into discrete, operationalizable stages with defined inputs, outputs, and validation criteria.

| Stage | Name | Function | Theoretical Basis |
|---|---|---|---|
| E | Experience | Context-embedded apprenticeship; learning occurs in the flow of work | Dewey, 1938; Brown et al., 1989 |
| A | Articulation | Intuition pumps & guided explanation; eliciting tacit narratives | Dennett, 2013; Polanyi, 1966 |
| S | Structuring | Abductive mapping into formal Knowledge Objects with provenance | Peirce, 1903; Simon, 1996 |
| C | Consolidation | Social verification & sensemaking before entering the canon | Weick, 1995; Walsh & Ungson, 1991 |
| I | Innovation | Smart Forgetting & knowledge evolution; pruning outdated KOs | Whitehead, 1929; Kluge & Gronau, 2018 |

Why EASCI?

SECI says tacit knowledge can be made explicit but doesn't explain the process, skipping the "how". EASCI splits this into two actions: first explaining the reasoning (Articulation), then organizing it (Structuring). This gives us a practical way to build AI that helps with each part.

Stage Mechanisms

- Experience: (Dewey) grounds knowledge in action: learning by doing, not isolation.

- Articulation: (Dennett, Polanyi) treats explanation as an elicited process requiring guided prompts.

- Structuring: (Peirce) formalizes tacit insights through abductive reasoning.

- Consolidation: (Weick) requires community sensemaking before knowledge enters the canon.

- Innovation: (Whitehead) ensures knowledge remains dynamic, allowing pruning and refinement.

Theoretical Backbone

This framework is the theoretical backbone that TacitFlow operationalizes. Each stage maps to specific system components and AI agents in the architecture.

Sensemaking (Weick, 1995)

Sensemaking is the retrospective process through which people construct "plausible accounts" of ambiguous situations. Unlike rational decision-making models, sensemaking acknowledges that meaning emerges through social interaction and narrative construction. TacitFlow's Consolidation stage operationalizes this by requiring peer dialogue before knowledge enters the canon.

Field Example

Time > 02:00 + Unlocked = Risk

David Kolb's Experiential Learning

David Kolb (1939-present) developed the Experiential Learning Theory (ELT) synthesizing work by Dewey, Lewin, and Piaget. His four-stage cycle shows learning as a spiral: (1) Concrete Experience (doing), (2) Reflective Observation (watching and reflecting), (3) Abstract Conceptualization (thinking and concluding), (4) Active Experimentation (planning and trying). Each stage requires different abilities. EASCI's five stages map directly onto Kolb's cycle with Consolidation added for social validation.

Dave Snowden's Cynefin Framework

Dave Snowden developed the Cynefin (Welsh: "habitat") framework distinguishing five domains: Simple (clear cause-effect; best practice), Complicated (expertise needed; good practice), Complex (emergent; probe-sense-respond), Chaotic (no patterns; act-sense-respond), and Disorder (unknown domain). Snowden critiqued SECI for treating all knowledge as if it existed in the "Complicated" domain. TacitFlow is designed for the Complex domain where knowledge emerges through interaction rather than transfer.

Presenter Notes

- EASCI vs SECI: Emphasize that SECI is a "what" model, EASCI is a "how" model.

- The Gap: The jump from Tacit to Explicit is the hardest part. It is split into Articulation (getting it out) and Structuring (making it useful).

- Theoretical Depth: Point out that the framework is not ad hoc; it is grounded in Dewey, Peirce, Weick, etc.

- Field Example: Walk through the police example to make it concrete.

EASCI Framework: The Knowledge Lifecycle

A dynamic system with recursive feedback paths

Unlike SECI's linear progression, EASCI models knowledge as a dynamic system with multiple feedback paths.

Dynamic vs. Linear

SECI presents a sequential spiral: Socialization → Externalization → Combination → Internalization. EASCI models knowledge as a complex adaptive system with multiple feedback loops. Innovation can trigger new Experience; Consolidation can require a return to Articulation.
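The feedback paths named here can be sketched as a small directed graph. This is an illustrative sketch only: the forward edges follow the E → A → S → C → I order, the two backward edges are the ones mentioned in the text, and the names `TRANSITIONS` and `can_move` are hypothetical rather than TacitFlow identifiers.

```python
# Illustrative sketch: EASCI stages as a directed graph with feedback edges.
# TRANSITIONS and can_move are hypothetical names, not TacitFlow identifiers.
STAGES = ["Experience", "Articulation", "Structuring", "Consolidation", "Innovation"]

# Forward path: each stage leads to the next one in the list.
TRANSITIONS = {a: {b} for a, b in zip(STAGES, STAGES[1:])}
TRANSITIONS["Innovation"] = set()

# Feedback paths named in the text.
TRANSITIONS["Innovation"].add("Experience")       # Innovation can trigger new Experience
TRANSITIONS["Consolidation"].add("Articulation")  # Consolidation can require a return to Articulation


def can_move(src: str, dst: str) -> bool:
    """Return True if the lifecycle allows a direct move from src to dst."""
    return dst in TRANSITIONS.get(src, set())
```

Keeping the edges in one table makes it easy to add or remove feedback paths as the framework evolves.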

Process Philosophy View

Whitehead's (1929) "creative advance into novelty" reframes knowledge as flux, not static entity. What appear as stable organizations are merely "relatively stable" patterns emerging from underlying processes. EASCI embodies this dynamic view.

Process Philosophy Explained

Process philosophy treats reality as fundamentally composed of events and processes rather than static substances. Whitehead, its primary architect, argued that "actual occasions" of experience are the ultimate constituents of reality. For knowledge management, this means: knowledge is not a thing to be stored but an ongoing process of becoming. Each moment of knowing integrates past experiences into present understanding and propels toward future inquiry.

Pragmatism Explained

Pragmatism (Peirce, James, Dewey) judges ideas by their practical consequences rather than abstract truth. Knowledge is validated through action: "truth is what works." For EASCI, this means: a KO is valid if it enables effective action. The framework doesn't seek abstract truth but practical utility: knowledge that helps practitioners navigate real situations.

Why Five Stages?

Each stage transforms knowledge through specific cognitive and social mechanisms. Unlike SECI's four modes, EASCI separates Articulation from Structuring because they require different AI interventions: dialogue-based elicitation vs. abductive formalization.

Presenter Notes

- The Loop: Focus on the visual. It's not a line, it's a cycle.

- Feedback: Point out the arrows going back. You can go from Innovation back to Experience.

- Philosophy: Briefly mention Whitehead and Pragmatism. The focus is on "what works" (Pragmatism) and "knowledge as a process" (Process Philosophy).

- AI Role: The AI helps move knowledge from one stage to the next.

EASCI Stages 1-3: Capture & Structure

The Capture Phase (E-A-S)

Stages 1-3 form the "capture" phase: grounding knowledge in action, surfacing hidden assumptions, and formalizing insights into verifiable Knowledge Objects.

1. Experience (Dewey, 1938; Brown & Duguid, 1991)

Context-Embedded Apprenticeship. Knowledge is not static data but active inquiry. Learning occurs in the flow of work ("learning by doing"), not in isolation.

2. Articulation (Dennett, 2013)

Intuition Pump Scenarios. Tacit knowledge resists direct questioning. The system uses "intuition pumps": thought experiments and counterfactuals to force the narration of hidden assumptions. This captures the why behind decisions.

3. Structuring (Peirce, 1903)

Abductive Mapping. Moving from "hunches" to hypotheses. Peirce's abduction is used to structure uncertain insights into formal "Knowledge Objects" (KOs) with provenance.

Why Three Stages for Capture?

SECI assumes tacit-to-explicit conversion happens spontaneously. EASCI decomposes this into discrete, operationalizable stages. Experience establishes authentic context. Articulation elicits hidden assumptions. Structuring formalizes hunches.

From Tacit to Explicit: The Gap EASCI Fills

Nonaka's SECI model identifies what needs to happen but not how. The scoping review (n=55 studies) found this "externalization gap" is the primary failure point. EASCI operationalizes the gap through cognitive mechanisms.

Situated Learning

Brown & Duguid (1991) argue that canonical descriptions of work often diverge from actual practice. Real learning happens through legitimate peripheral participation within communities of practice.

Embodied Cognition

Merleau-Ponty (1945) argued knowledge is grounded in bodily experience. TacitFlow's Experience stage captures pre-reflective patterns through behavioral observation.

Maurice Merleau-Ponty (1908-1961)

French phenomenologist who established the body as the primary site of knowing. Against Cartesian dualism, he argued that perception is active embodied engagement. TacitFlow captures this through contextual metadata.

Phenomenology

Study of conscious experience from a first-person perspective (Husserl). It treats subjective experience as valid data, not bias to be eliminated.

Cartesian Dualism

Descartes' separation of mind and body. Merleau-Ponty's critique grounds tacit knowledge theory: knowing involves the whole embodied person.

Abductive Mapping

Peirce distinguished abduction (guessing best explanation) from deduction and induction. Structuring uses abduction to generate hypotheses from sparse evidence.

Exploration vs. Exploitation

March (1991): Organizations must balance efficiency (exploitation) with innovation (exploration). EASCI structures this tension.

Capture Phase in Practice

Example: Investigator notices anomaly.

E: Sensors capture data.

A: AI asks "Why?".

S: KO created: "Rapid access + unusual time = risk".

Communities of Practice

Lave & Wenger (1991): Knowledge transfer occurs through "legitimate peripheral participation". TacitFlow uses mentor-novice pairing.

Grounded Cognition

Barsalou (2008): Conceptual knowledge involves modal simulations. Expert judgment relies on "feeling" if a situation matches prior patterns.

Cognitive Apprenticeship

Collins et al. (1989): Modeling, coaching, scaffolding, and fading. AI probes function as scaffolding that fades as reasoning improves.

Reflective Practice Feedback Loop

Schön (1983): "Reflection-in-action". Innovation reveals gaps, prompting refinement of Articulation methods.

Pattern Recognition Feedback Loop

Simon (1996): Experts recognize patterns. Innovation helps identify recurring structures, improving Structuring.

Legitimate Peripheral Participation

Newcomers learn by participating at the edges of practice. TacitFlow operationalizes this through guided observation.

Bounded Rationality

Simon (1957): Humans use heuristics due to limited processing power. KOs provide pre-organized knowledge to reduce search costs.

Presenter Notes

- Feedback Loops: Emphasize that this isn't a one-way street. Structuring feeds back to Experience.

- Embodied Cognition: This is key. We aren't just capturing words; we're capturing the context of the body in the environment.

- The Gap: Remind them again that SECI fails at the "how". This slide shows the "how".

EASCI Stages 4-5: Consolidate & Evolve

The Validate Phase (C-I)

Stages 4-5 form the "validate" phase: social verification ensures quality, while Smart Forgetting keeps the knowledge base current and relevant.

4. Consolidation (Weick, 1995)

Social Reflection. Individual insights are fragile. They must survive social verification and consensus building to become organizational truth. Mentor verification circles and dissent logging ensure that only validated, agreed-upon facts enter the canon.

5. Innovation (Whitehead, 1929)

Creative Application. The system must evolve. "Smart Forgetting" (Kluge & Gronau, 2018) prunes outdated KOs, ensuring the knowledge base remains a living process. This is the Creative Advance: the settled past becomes the platform for novel future occasions.

Why Does Validation Require Two Stages?

Individual insights are fragile and prone to bias. Consolidation implements Weick's sensemaking: social verification ensures insights survive peer scrutiny. Innovation implements Whitehead's process philosophy: knowledge evolves through Creative Advance.

Reflection-in-Action vs. Reflection-on-Action

Schön distinguished two reflective modes: Reflection-in-action occurs during performance (e.g., jazz improvisation). Reflection-on-action occurs afterward. TacitFlow's voice capture supports both: in-the-moment observations and post-event debriefs.

The Compounding Effect

By formalizing the feedback loops (e.g., Double-Loop Learning), the system turns linear investigation steps into a compounding asset. Each case solved makes the next one faster, creating institutional memory that survives personnel turnover.

Smart Forgetting Protocol

Innovation requires unlearning. This protocol ensures outdated intelligence (e.g., disproven hypotheses) is actively pruned to prevent "zombie facts."

Social Verification as Quality Gate

Von Krogh et al. (2000): Knowledge creation requires "enabling conditions" like trust and empathy. TacitFlow's mentor circles operationalize this, surfacing tacit disagreements to prevent hallucination loops.

Double-Loop Learning

Argyris & Schön (1978): Unlike single-loop learning (correcting errors), double-loop learning questions governing assumptions. It supports revising protocols when standard methods fail.

Social Learning Theory

Bandura (1977): Learning occurs through observation and modeling. TacitFlow's mentor circles create observational learning opportunities.

Observational Learning

Learning by watching others. Components: attention, retention, reproduction, motivation. TacitFlow makes validated expert KOs visible to learners.

Validate Phase in Practice

Consolidation: Mentor circle reviews KO. Senior confirms pattern. Junior dissents. Consensus reached → KO enters vector store.

Innovation: New case law changes standards. Smart Forgetting flags KO. Expert reviews and retires it with provenance trail.

Embodied Knowledge Feedback Loop

Merleau-Ponty (1945): Structuring creates cognitive schemas that become the pre-reflective lens for future Experience.

Organizational Memory Feedback Loop

Walsh & Ungson (1991): Consolidated KOs become the institutional vocabulary that shapes how practitioners articulate new experiences.

Double-Loop Learning Feedback Loop

Innovation back to Experience reframes the entire knowledge capture process, creating fundamental shifts in practice.

Stable Tacit Practices

Practices are not static but actively performed. Consolidation creates "relative stability" where knowledge is routinized yet subject to re-enactment.

Destabilization Events

External shocks or anomalies force a shift from unreflective habit to active sensemaking (Dewey's "problematic situation").

Metacognition

Flavell (1979): "Thinking about thinking." TacitFlow's reflective probes develop awareness of one's own cognitive processes.

Lures for Feeling

Whitehead: Validated KOs become "lures" that shape how practitioners perceive future situations.

Resilience Engineering

Hollnagel (2011): Innovation enables adaptive capacity by updating mental models based on accumulated experience.

Presenter Notes

- Social Verification: Emphasize that AI alone cannot validate tacit knowledge; human consensus is required.

- Smart Forgetting: This is crucial for preventing "zombie facts" in the vector store.

- Feedback Loops: Show how Innovation feeds back into the start of the cycle (Experience), creating a continuous learning engine.

Why an Audio-Based Approach for TacitFlow?

Situated Interaction & The Contextual Imperative

The Bandwidth Gap

Explicit Only: Written reports force users to filter out "irrelevant" details, often discarding the tacit context.

Rich Signal: Voice captures how something is said (hesitation, urgency, confidence), which is critical metadata for intelligence.

Situated Interaction

Tacit knowledge rarely appears in formal documents; it emerges through dialogue, mentoring, and situated interaction (Brown, Collins & Duguid, 1989). Audio supports in-the-moment articulation in security, corrections, and investigative contexts where workers cannot type.

HCI Evidence

HCI research shows voice interfaces reduce cognitive burden and improve engagement during reflective tasks (Lai et al., 2022; Kocielnik et al., 2018). Pradhan et al. (2020) demonstrate improved accessibility for users with low technological proficiency.

The "Sticky" Knowledge Problem

Von Hippel (1994) showed that tacit knowledge is inherently "sticky," bound to the context where it was developed. Decontextualized knowledge loses meaning. TacitFlow addresses this by capturing rich contextual metadata: location, time, personnel present, preceding events, emotional tone. Context travels with the knowledge.

Overcoming Sharing Dilemmas

Cabrera & Cabrera (2005) identify knowledge sharing as a social dilemma where collective benefit is high but individual costs (time, status risk) disincentivize contribution. TacitFlow addresses this through:

- Attribution: Visible credit for contributions builds self-efficacy.

- Low Friction: Voice reduces the effort cost of sharing.

- Social Norms: Peer validation celebrates sharing.

Validation Approach

Our research tests the assumption that voice is the right capture medium through a scoping review, expert interviews, and prototype evaluation. Validation is part of the process, not a precondition.

Self-Determination Theory

Deci & Ryan (2000): Intrinsic motivation depends on autonomy, competence, and relatedness. TacitFlow's voice-first design supports personal agency (choosing what to share) rather than forcing structured input.

Self-Efficacy

Bandura (1977): Belief in one's ability to succeed. TacitFlow builds efficacy through structured success experiences and visible attribution.

Paralinguistic Information

Voice captures hesitation, emphasis, and tone: metadata for tacit judgment. Polanyi (1966) noted experts often "feel" rightness before they can explain it. Audio preserves these signals; text discards them.

Contextual Metadata

Data about data capturing creation circumstances. TacitFlow captures:

- Temporal: Timestamp, duration.

- Spatial: GPS, zone.

- Social: Presence, supervisor.

- Operational: Case #, incident type.

- Physiological: Heart rate (stress).

Situated Interaction

Brown, Collins & Duguid (1989): Knowledge is inseparable from the activity and context in which it develops.

Subtle Cues

Audio captures confidence or uncertainty. Experts often "feel" rightness before they can explain it.

Presenter Notes

- Why Voice? It's not just convenience; it's about capturing the "sticky" context and emotional nuance that text misses.

- Motivation: Explain how we use Self-Determination Theory to make people want to share.

- The Dilemma: Acknowledge that sharing is hard/risky. Show how we lower the cost (voice) and raise the reward (attribution).

How Does EASCI Inform TacitFlow’s Design?

Operationalizing Epistemology with Provenance

Core Principle: EASCI defines what epistemological transformations must occur for tacit knowledge to become institutional memory. TacitFlow defines how these transformations are captured, structured, and validated in practice, with full provenance traceability.

| Stage | Theoretical Basis | TacitFlow Implementation | PROV-DM Mapping |
|---|---|---|---|
| Experience | Dewey's (1938) experiential inquiry; Brown & Duguid (1991) situated learning | Voice capture in real operational contexts; contextual metadata recorded automatically | prov:Activity (capture) + prov:Agent (practitioner) |
| Articulation | Dennett's (2013) "intuition pumps"; Polanyi's (1966) tacit-to-explicit conversion | AI-guided conversational probes surface implicit reasoning via scenarios | prov:wasGeneratedBy linking speech to articulation |
| Structuring | Peirce's (1903) abductive reasoning; graph-based knowledge representation | GraphRAG + GoT transforms narratives into Knowledge Objects | prov:wasDerivedFrom tracing KO derivation |
| Consolidation | Weick's (1995) organizational sensemaking; social construction of "plausible accounts" | Human-in-the-loop review; peer validation; confidence scoring | prov:wasAttributedTo for reviewer accountability |
| Innovation | Whitehead's (1929) "creative advance"; Kluge & Gronau (2018) on forgetting | "Smart Forgetting" invalidates obsolete KOs; feedback loop creates new context | prov:wasInvalidatedBy for retired knowledge |

Knowledge Objects (KOs)

A Knowledge Object is TacitFlow's atomic unit: a structured claim bundling evidence, confidence score, derivation chain, and contributor attribution. Unlike unstructured notes, KOs are typed entities with formal provenance, traceable from capture to canonization.

PROV-DM maps KOs as prov:Entity, with relations tracking who captured them (wasAttributedTo), how they were derived (wasDerivedFrom), and when they become obsolete (wasInvalidatedBy).

W3C PROV-DM: The Provenance Foundation

The PROV Data Model (PROV-DM) is a W3C standard (2013) for representing provenance information on the Web. It defines a minimal vocabulary for describing how things (entities) are created, modified, or used by activities, with agents bearing responsibility.

- Entity (prov:Entity): A physical, digital, conceptual, or other thing with fixed aspects. In TacitFlow: Knowledge Objects, audio recordings, derived artifacts.

- Activity (prov:Activity): Something occurring over time that acts upon or with entities. Examples: voice capture sessions, AI structuring processes, peer review activities.

- Agent (prov:Agent): Something bearing responsibility for an activity or entity existence. Includes practitioners, AI systems, review committees.
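As a rough illustration of how the three core types fit together, the sketch below describes one capture session as a JSON-LD-style Python dictionary. The `prov:` terms come from the W3C vocabulary; every `ex:` identifier and the `example.org` namespace are hypothetical placeholders, and the layout is an assumption rather than TacitFlow's actual serialization.

```python
import json

# ex:... identifiers are hypothetical; prov: terms are from W3C PROV-O.
ko_provenance = {
    "@context": {
        "prov": "http://www.w3.org/ns/prov#",
        "ex": "http://example.org/tacitflow/",
    },
    "@graph": [
        # Agent: the practitioner responsible for the capture.
        {"@id": "ex:practitioner-01", "@type": "prov:Agent"},
        # Activity: the voice capture session carried out by that agent.
        {
            "@id": "ex:capture-session-01",
            "@type": "prov:Activity",
            "prov:wasAssociatedWith": {"@id": "ex:practitioner-01"},
        },
        # Entity: the audio recording produced by the session.
        {
            "@id": "ex:recording-01",
            "@type": "prov:Entity",
            "prov:wasGeneratedBy": {"@id": "ex:capture-session-01"},
        },
        # Entity: the Knowledge Object derived from the recording.
        {
            "@id": "ex:ko-01",
            "@type": "prov:Entity",
            "prov:wasDerivedFrom": {"@id": "ex:recording-01"},
            "prov:wasAttributedTo": {"@id": "ex:practitioner-01"},
        },
    ],
}

print(json.dumps(ko_provenance, indent=2))
```

Reading the graph from the bottom up answers the audit questions in order: what the KO was derived from, which activity produced that source, and who was responsible.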

Knowledge Object Structure

Each KO contains:

- Claim: the asserted knowledge statement

- Evidence: supporting sources and observations

- Confidence: weighted score based on evidence quality

- Provenance: full derivation chain per W3C PROV-O

- Metadata: timestamps, classification labels, contributor IDs

- Relations: typed links to other KOs in the graph
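The six fields above could be held in a small typed container along the following lines. This is a minimal sketch under stated assumptions: the class name, field types, the 0 to 1 confidence range, and the example identifiers (`voice-note-17`, `ko-0042`) are all hypothetical and not taken from the TacitFlow codebase.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class KnowledgeObject:
    """Illustrative container mirroring the six listed KO fields."""
    claim: str
    evidence: list[str]
    confidence: float  # assumed to lie in [0, 1]
    provenance: list[str] = field(default_factory=list)  # derivation chain, oldest first
    metadata: dict[str, str] = field(default_factory=dict)
    relations: list[tuple[str, str]] = field(default_factory=list)  # (relation type, target KO id)

    def __post_init__(self) -> None:
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")
        # Record a creation timestamp if the caller did not supply one.
        self.metadata.setdefault("created_at", datetime.now(timezone.utc).isoformat())


ko = KnowledgeObject(
    claim="Rapid access + unusual time = risk",
    evidence=["voice-note-17"],          # hypothetical evidence id
    confidence=0.7,                      # hypothetical score
    relations=[("supports", "ko-0042")], # hypothetical link
)
```

Validating the confidence range at construction time keeps malformed objects out of later stages.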

PROV-O Vocabulary

PROV-O (W3C, 2013) is the OWL representation of PROV-DM. TacitFlow serializes all KO provenance as PROV-O-compliant RDF, enabling interoperability with any PROV-aware system.

From "Sticky" to Traceable

Von Hippel (1994) identified tacit knowledge as "sticky": costly to transfer because context is lost. PROV-DM embeds context (situational metadata, derivation history) directly into the knowledge structure.

Addressing the Trust Barrier

Reluctance to share often stems from lack of attribution. PROV-DM's wasAttributedTo ensures contributors receive explicit credit, transforming sharing into a documented contribution.

Ontology (Information Science)

A formal specification of a conceptualization. Unlike a taxonomy, ontologies capture complex relationships (e.g., "an officer can supervise multiple incidents").

Taxonomy vs. Ontology

Taxonomy: Tree structure (is-a). Dog is an animal.

Ontology: Graph structure (arbitrary relations). Dog is owned-by Person.
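The difference can be made concrete with two toy data structures. The sketch below is illustrative only; the helper names `ancestors` and `objects` are hypothetical, and the entries reuse the examples from the text.

```python
# Taxonomy: every term has at most one parent (an is-a tree).
taxonomy = {"Dog": "Animal", "Cat": "Animal", "Animal": None}


def ancestors(term: str) -> list[str]:
    """Walk up the is-a tree from a term to the root."""
    chain = []
    parent = taxonomy[term]
    while parent is not None:
        chain.append(parent)
        parent = taxonomy[parent]
    return chain


# Ontology: arbitrary typed relations stored as (subject, predicate, object) triples.
ontology = {
    ("Dog", "is-a", "Animal"),
    ("Dog", "owned-by", "Person"),
    ("Officer", "supervises", "Incident"),
}


def objects(subject: str, predicate: str) -> set[str]:
    """All objects linked to a subject by the given relation type."""
    return {o for s, p, o in ontology if s == subject and p == predicate}
```

The tree can only answer "what kind of thing is this?", while the triple set can answer any relation it has been given.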

The Provenance Chain

Creation Trail (who/when) → Derivation Trail (reasoning logic) → Access Trail (retrieval). All trails are W3C PROV-O compliant.

Audit Trail Architecture

TacitFlow maintains three audit layers ensuring full accountability and traceability for every piece of knowledge in the system.

Presenter Notes

- Provenance is Key: Emphasize that we aren't just storing text; we are storing the history of the knowledge. This builds trust.

- Standards: Mention W3C PROV-DM. This isn't a proprietary format; it's an open standard for interoperability.

- Attribution: Point out how the wasAttributedTo relation directly solves the "why should I share?" problem by guaranteeing credit.

The EASCI Lifecycle

The Macro Loop: How knowledge evolves from experience to institutional memory

TacitFlow uses a transparent, human-led process to harvest knowledge and turn it into permanent, verifiable institutional memory in the form of Knowledge Objects (KOs). This "Macro Loop" ensures that every successful investigation makes the entire system smarter, creating a compound-interest effect on intelligence (Irbe, 2025b).

The system adapts the EASCI framework (Experience → Articulation → Structuring → Consolidation → Innovation) to govern AI knowledge. Unlike "black-box" retraining, the system uses a transparent, human-led process to harvest and canonize new Knowledge Objects (KOs). This aligns with the Europol Programming 2025-2027 goal of "information superiority" by treating every query as a potential contribution to the canon (Europol, 2025).

Checkpoint Instrumentation

Every phase of the loop has a strict "Gate" that must be passed before the knowledge is promoted.

| Phase | Description | Artifact | Gate |
|---|---|---|---|
| Experience | Knowledge acquired through practical, situated action in real-world missions. | Raw Logs | Sensors |
| Articulation | Making hidden assumptions explicit through intuition pump scenarios. | Draft KO | Narrative |
| Structuring | Mapping reasoning to knowledge graphs with PROV-O standards. | Signed KO | PROV-O |
| Consolidation | Validating through community sensemaking and mentor verification. | Vector Index | Consensus |
| Innovation | Smart Forgetting retires stale KOs; fine-tuning reflects new reality. | Weights | Eval |
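The gating idea can be sketched as a loop that stops at the first failed check. The phase order follows the table; the gate checks themselves, the dictionary keys, and the two-approval consensus threshold are placeholder assumptions chosen only to make the example run.

```python
from typing import Callable

# Phase order from the table above.
PHASES = ["Experience", "Articulation", "Structuring", "Consolidation", "Innovation"]


def promote(item: dict, gates: dict[str, Callable[[dict], bool]]) -> str:
    """Advance an item through the phases until a gate fails.

    Returns the last phase whose gate passed, or "Rejected" if the first gate fails.
    """
    reached = "Rejected"
    for phase in PHASES:
        if not gates[phase](item):
            break
        reached = phase
    return reached


# Hypothetical gate checks keyed by phase (table gate names in comments).
example_gates = {
    "Experience": lambda it: bool(it.get("raw_log")),         # Sensors
    "Articulation": lambda it: bool(it.get("narrative")),     # Narrative
    "Structuring": lambda it: bool(it.get("prov_signed")),    # PROV-O
    "Consolidation": lambda it: it.get("approvals", 0) >= 2,  # Consensus (threshold assumed)
    "Innovation": lambda it: bool(it.get("eval_passed")),     # Eval
}

draft = {"raw_log": "sensor dump", "narrative": "expert explanation", "prov_signed": False}
print(promote(draft, example_gates))  # Articulation
```

Because the loop breaks at the first failure, an item can never skip a gate to reach a later phase.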

The Tacit Knowledge Gap

40% of public servants aged 50+ exit within 7 years. The solution applies the EASCI Framework to systematically capture this tacit knowledge.

The "Flywheel" Effect

Every round makes the system smarter. Verified KOs help with future cases, so knowledge grows faster over time.

Why "Compounding"?

Every solved case becomes a reference for the next one. The system doesn't just "process" data; it learns from it, but only through a strictly governed pipeline.

Why Gates Matter

Without strict gates, AI systems accumulate "zombie facts" - outdated or unverified information that degrades decision quality. Every promotion requires passing the gate.

Nonaka's SECI Model

Our EASCI framework is an evolution of Nonaka & Takeuchi's (1995) SECI model, adapted for AI-human hybrid teams. (Irbe, 2025b)

Smart Forgetting

Innovation requires not just learning, but unlearning. Kluge & Gronau (2018) showed that organizational knowledge must be actively pruned. "Smart Forgetting" invalidates outdated KOs to prevent "zombie facts."
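One way to picture invalidation without deletion is sketched below. It is an assumption-laden illustration: the `retire` and `active` helpers, the `status` field, and the nested record standing in for a PROV invalidation activity are all hypothetical, not TacitFlow's implementation.

```python
from datetime import datetime, timezone


def retire(ko: dict, reason: str, reviewer: str) -> dict:
    """Return a copy of the KO marked as invalidated, keeping its content for audit."""
    retired = dict(ko)
    retired["status"] = "invalidated"
    # Simplified stand-in for a PROV invalidation activity.
    retired["prov:wasInvalidatedBy"] = {
        "reason": reason,
        "reviewer": reviewer,
        "at": datetime.now(timezone.utc).isoformat(),
    }
    return retired


def active(kos: list[dict]) -> list[dict]:
    """Only KOs that have not been invalidated remain eligible for retrieval."""
    return [k for k in kos if k.get("status") != "invalidated"]


store = [
    {"id": "ko-1", "claim": "Old search standard applies"},      # hypothetical KO
    {"id": "ko-2", "claim": "Rapid access + unusual time = risk"},
]
store[0] = retire(store[0], reason="superseded by new case law", reviewer="expert-07")
```

The retired object stays in the store with its reason and reviewer attached, so it drops out of retrieval while the trail of why it was retired survives.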

Smart Forgetting & Creative Advance

Whitehead's (1929) "creative advance into novelty" reframes this: the settled past (validated KOs) becomes the platform for future innovation. The Innovation stage retires obsolete knowledge while generating new experiential context.

Complexity at the Edge

Stacey's Complex Responsive Processes (2000) and Kauffman's At Home in the Universe (1995) explain why the feedback loops produce non-linear, emergent outcomes. The compounding effect emerges from self-organizing interactions across EASCI stages.

Edge of Chaos

A metaphor from complexity science describing the boundary zone between order and randomness where complex systems exhibit maximum adaptability and creativity. Effective organizations operate at this productive boundary.

Transactive Memory System

Wegner (1995): Organizations remember collectively through a distributed cognitive network where members know "who knows what." TacitFlow operationalizes TMS by explicitly mapping expertise networks.

GDPR Compliance

GDPR Art. 17: The Innovation phase includes data retirement protocols. When KOs are superseded, the system maintains audit trails while pruning operational data per Art. 17 (Right to Erasure) requirements.

Presenter Notes

- The Macro Loop: This is the big picture. It's not just about answering one question; it's about building a brain for the organization.

- Gates: Emphasize that quality control is built-in. We don't just ingest everything.

- Smart Forgetting: This is a unique feature. Most systems just add data; we actively prune it to keep it relevant and legal.

The Integrated Reasoning Cycle (Micro Loop)

From raw retrieval to provenance-backed knowledge in seconds

While the Macro Loop evolves over months, the Micro Loop executes per query. It implements a rigorous Retrieve → Reason → Synthesize cycle ensuring every response is grounded in evidence, not model hallucination. This powers the Structuring phase of the EASCI lifecycle (Irbe, 2025b).

The 3-Step Process

1. Retrieve: The agent queries the vector store. Instead of raw text, it retrieves validated Knowledge Objects. This ensures the "facts" are grounded in the case file, not the model's training data (Lewis et al., 2020).

2. Reason: Using abductive reasoning, the agent connects the dots. It generates hypotheses with Graph-of-Thought and filters them via mutual exclusion (Bhagavatula et al., 2020; Zhao et al., 2023).

3. Synthesize: The inference is wrapped in PROV-O metadata recording who generated it and which documents were used. The provenance chain is cryptographically signed for audit. The output is a JSON-LD Knowledge Object (W3C, 2013).
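The Retrieve → Reason → Synthesize cycle can be sketched end to end with toy stand-ins. Everything here is an assumption for illustration: tag overlap replaces vector search, a "most tags explained" rule replaces Graph-of-Thought abduction, and a SHA-256 digest stands in for a real cryptographic signature. The store contents and function names are hypothetical.

```python
import hashlib
import json

KO_STORE = [  # hypothetical validated KOs
    {"id": "ko-1", "claim": "Rapid access + unusual time = risk", "tags": {"access", "time"}},
    {"id": "ko-2", "claim": "Unlocked door after 02:00 is a risk cue", "tags": {"door", "time"}},
    {"id": "ko-3", "claim": "Routine patrol covers zone B hourly", "tags": {"patrol"}},
]


def retrieve(query_tags: set[str]) -> list[dict]:
    """Step 1: return KOs sharing at least one tag (stand-in for vector search)."""
    return [ko for ko in KO_STORE if ko["tags"] & query_tags]


def reason(kos: list[dict], query_tags: set[str]) -> dict:
    """Step 2: pick the KO that explains the most query tags (toy abduction)."""
    return max(kos, key=lambda ko: len(ko["tags"] & query_tags))


def synthesize(best: dict, used: list[dict], agent: str) -> dict:
    """Step 3: wrap the inference with provenance and a content digest."""
    body = {
        "claim": best["claim"],
        "prov:wasDerivedFrom": [ko["id"] for ko in used],
        "prov:wasAttributedTo": agent,
    }
    digest = hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()
    return {**body, "digest": digest}  # a hash, not a real signature


query = {"access", "time"}
hits = retrieve(query)
answer = synthesize(reason(hits, query), hits, agent="agent-demo")
```

The output records every KO that was consulted, not only the one that won, so a reviewer can see which evidence was considered.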

System Comparison

| Feature | Standard LLM (ChatGPT) | TacitFlow Agent |
|---|---|---|
| Memory | Ephemeral Context Window | Persistent Knowledge Graph (GraphRAG) |
| Truth Source | Training Weights (Black Box) | Retrieved Evidence (RAG) with Provenance |
| Reasoning | Probabilistic Token Prediction | Abductive Logic (Graph-Based) |
| Output | Unstructured Text | Structured JSON-LD Knowledge Objects |
| Accountability | None: "I just generate text" | Full audit trail to human sources |

Why This Matters: The Linear vs. Cyclic Gap

Standard LLMs "think" linearly: they predict the next token from the tokens that precede it. TacitFlow forces a cyclic process where every output must be validated against the Knowledge Graph before it is accepted. This creates grounded, evidence-based responses rather than plausible-sounding hallucinations.

Micro vs Macro Loop

- Micro-Loop: Tactical, real-time reasoning (OODA: Observe, Orient, Decide, Act).

- Macro-Loop: Strategic knowledge governance (EASCI).

GraphRAG (Edge et al., 2024)

Connecting LLMs to knowledge graphs produces more accurate, traceable responses than document-based RAG alone. The graph structure enables reasoning over relationships, not just retrieving text chunks.

Graph-of-Thought

Structuring reasoning as a graph (not a chain) enables exploring multiple hypotheses simultaneously and selecting the best explanation through mutual exclusion (Besta et al., 2024).

Confidence Propagation

Each hypothesis in the GoT graph carries a confidence score derived from evidence quality. Pruning events update confidence in connected nodes.
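One possible reading of "pruning events update confidence in connected nodes" is sketched below. It assumes the connected nodes are mutually exclusive competitors, and that removing one redistributes its confidence proportionally among the rest. The source does not specify the update rule, so the renormalization and the hypothesis names are illustrative assumptions.

```python
def prune_and_propagate(confidence: dict[str, float], pruned: str) -> dict[str, float]:
    """Remove `pruned` and renormalize the remaining competing hypotheses.

    Assumption: the hypotheses are mutually exclusive, so their confidences
    are treated as shares that should sum to 1 after every pruning event.
    """
    remaining = {h: c for h, c in confidence.items() if h != pruned}
    total = sum(remaining.values())
    if total == 0:
        return remaining  # nothing left to redistribute
    return {h: c / total for h, c in remaining.items()}


# Hypothetical confidence scores for three competing explanations.
scores = {"H1": 0.5, "H2": 0.3, "H3": 0.2}
updated = prune_and_propagate(scores, "H3")
print(updated)  # H1 rises to about 0.625, H2 to about 0.375
```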

Mutual Exclusion & Modus Tollens

GoT uses modus tollens ($((P \to Q) \land \neg Q) \to \neg P$) for hypothesis pruning: if a hypothesis implies a consequence that contradicts evidence, the hypothesis is eliminated. This prevents "hallucination cascades."
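The pruning rule can be sketched in a few lines. Each hypothesis is modeled as the set of consequences it implies, and a hypothesis is dropped as soon as one of those consequences is contradicted by evidence. The hypothesis and consequence names are hypothetical and only illustrate the rule.

```python
def prune_by_modus_tollens(
    hypotheses: dict[str, set[str]], refuted: set[str]
) -> dict[str, set[str]]:
    """Keep only hypotheses whose implied consequences are all unrefuted.

    If P implies Q and the evidence shows not-Q, then P is eliminated.
    """
    return {name: cons for name, cons in hypotheses.items() if not (cons & refuted)}


# Each hypothesis P maps to the consequences Q it implies (illustrative only).
hypotheses = {
    "insider_access": {"badge_log_entry", "door_opened"},
    "forced_entry": {"door_damaged", "alarm_triggered"},
}
# Evidence shows the alarm did NOT trigger, so "alarm_triggered" is refuted.
refuted = {"alarm_triggered"}

surviving = prune_by_modus_tollens(hypotheses, refuted)
print(sorted(surviving))  # ['insider_access']
```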

Complexity & Emergence

Kauffman (1995) showed complex systems self-organize at the "edge of chaos." The Micro Loop's cyclic process mirrors this: emergent intelligence arises from constrained iteration, not linear prediction.

Heuristics

Heuristics (availability, representativeness) enable fast decisions but introduce bias. TacitFlow's structured KOs provide reliable anchors to correct these biases (Kahneman, 2011).

Memory Architecture

LLM context windows are ephemeral and bounded (commonly 128k–200k tokens in current commercial models). TacitFlow's Knowledge Graph persists indefinitely, transforming the assistant from a "stateless oracle" to a "learning partner."

Token Prediction vs. Reasoning

Standard LLMs predict the next token based on statistics. TacitFlow forces a different process: retrieve evidence, reason over it, then validate.

Multi-Hop Reasoning

Multi-hop reasoning answers questions that require several connected facts (e.g., "Who trained the officer who handled Case X?"). Vector search alone cannot traverse these relational chains; GraphRAG can.

Semantic vs. Keyword Search

Keyword: exact matches. Semantic: conceptual similarity ("routine patrol" $\approx$ "standard rounds"). TacitFlow combines both.

Dense Retrieval

Dense retrieval uses neural networks to encode queries and documents into dense vectors. Similarity is typically computed via cosine similarity, which captures semantic relationships that sparse methods such as BM25 miss.
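The similarity computation itself is small enough to show directly. In the sketch below the vectors are hand-written stand-ins for encoder output, not real embeddings, and the document labels are illustrative.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Dot product divided by the product of the vector norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Assumption: toy 3-dimensional "embeddings"; a real encoder would produce
# hundreds of dimensions learned from data.
docs = {
    "standard rounds": [0.9, 0.1, 0.0],
    "budget report": [0.0, 0.2, 0.9],
}
query = [1.0, 0.0, 0.0]  # stands in for the encoded query "routine patrol"

ranked = sorted(docs, key=lambda d: cosine_similarity(query, docs[d]), reverse=True)
print(ranked[0])  # standard rounds
```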

Theoretical Foundations

- Dewey: Knowledge is dynamic transformation ("situated inquiry").

- Dennett: "Intuition pumps" force externalization of tacit assumptions.

- Peirce: Abduction generates hypotheses from sparse evidence.

- Weick: Knowledge is "enacted" through social narrative.

- Whitehead: "Creative advance" - past KOs become data for new models.

Workplace Learning

Eraut (2004): Most learning is informal. The "Experience" stage captures authentic practice rather than formal documentation.

Social Knowledge

Tacit transfer depends on trust (Leonard & Sensiper). Mentor circles and verification address sharing barriers (Cabrera & Cabrera).

Presenter Notes

- The Micro Loop: This is the engine under the hood. It happens in seconds.

- GraphRAG: Explain the difference between just searching for text (Standard RAG) and searching for relationships (GraphRAG).

- Reasoning vs. Prediction: LLMs just predict the next word. This system actually "thinks" by checking facts against the graph.

- Provenance: We can trace every answer back to the source. No black box.

The Integrated Reasoning Cycle (Micro Loop)

Reasoning Engine

Interactive Visualization

Click Run Cycle to observe how the agent retrieves KOs, constructs a reasoning graph, and synthesizes a provenance-backed response.

Terminal Output

The terminal below the graph shows the raw system logs, including vector similarity scores and logical pruning events.

EASCI Simulation

Interactive demonstration of how the system captures, reasons about, and structures tacit knowledge in real time.

Integration of Theory

Integrates three theoretical components: (1) EASCI stages as macro-level knowledge lifecycle, (2) micro-loop inference cycle (Boyd, 1987), and (3) W3C PROV-O for provenance tracking.

Demo Instructions

Click "Run Simulation" to see a knowledge capture scenario. Use step controls (⏮ ⏭) for pedagogical walk-through. The visualization shows stage transitions, KO validation, and feedback loops.

Simulation Scenario

A security officer notices an anomaly during patrol. The system captures their observation, elicits reasoning through intuition pumps (indirect "what if?" questions), structures it into a KO, seeks peer validation, and updates the threat model.

Production Telemetry

TacitFlow tracks token consumption, retrieval latency (ms), confidence scores (%), and provenance node count. Three trace panels show GraphRAG data flow, GoT reasoning graphs, and PROV-O chains.

Retrieval Latency

Time from query to retrieval. Must be <200ms for voice. Optimized via ANN indexing and caching. High latency indicates graph growth or query complexity.

Confidence Scores

Scores are based on embedding similarity, provenance completeness, peer validation, and recency. A score below 70% triggers additional evidence retrieval; a score below 50% triggers expert review.
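One way to combine the four signals into a single score is a weighted average, sketched below. The source names the signals but not how they are combined, so the weights and the linear form are assumptions chosen only for illustration.

```python
# Hypothetical weights; they sum to 1 so the score stays in [0, 1].
WEIGHTS = {
    "similarity": 0.4,   # embedding similarity
    "provenance": 0.3,   # provenance completeness
    "validation": 0.2,   # peer validation
    "recency": 0.1,      # recency
}


def confidence(signals: dict[str, float]) -> float:
    """Weighted average of the four signals, each expected in [0, 1]."""
    for name in WEIGHTS:
        if not 0.0 <= signals[name] <= 1.0:
            raise ValueError(f"{name} must be in [0, 1]")
    return sum(WEIGHTS[name] * signals[name] for name in WEIGHTS)


score = confidence(
    {"similarity": 0.9, "provenance": 1.0, "validation": 0.5, "recency": 0.5}
)
print(round(score, 2))  # 0.81
```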

Real-Time Confidence

Confidence scores update live. Low confidence (<70%) triggers additional evidence retrieval. Very low confidence (<50%) flags the KO for manual expert review. This prevents premature consolidation of uncertain knowledge.
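The two thresholds translate into a small routing function. The sketch assumes the tiers are exclusive, so a score below 50% goes straight to expert review rather than also triggering retrieval. The source does not settle that point, and the action labels are hypothetical.

```python
RETRIEVE_BELOW = 0.70  # from the text: <70% triggers additional retrieval
REVIEW_BELOW = 0.50    # from the text: <50% flags the KO for expert review


def route(confidence: float) -> str:
    """Map a confidence score in [0, 1] to the next action for a KO."""
    if confidence < REVIEW_BELOW:
        return "expert_review"
    if confidence < RETRIEVE_BELOW:
        return "retrieve_more_evidence"
    return "accept"


for value in (0.85, 0.62, 0.41):
    print(value, route(value))
```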

Real-Time Telemetry

The telemetry panel shows: PROV nodes (provenance chain length), KO count (Knowledge Objects referenced), Confidence % (weighted evidence score), Token count (LLM resource usage).

Production Metrics

Tracks Capture latency (time from observation to KO creation), Articulation completeness (AI probe iteration count), Validation rates (peer acceptance %), and Decay ratios (KOs archived vs. promoted).

Why Visualization Matters

Tufte (2001) emphasizes that complex processes become comprehensible when represented visually with appropriate detail. This simulation shows data at multiple levels simultaneously: individual reasoning steps (micro), stage transitions (macro), and system-wide metrics (telemetry).

Small Multiples

The three trace panels (GraphRAG, GoT, PROV-O) follow Tufte's "small multiples" principle (Tufte, 2001): identical visual structures showing different data streams allow direct comparison and pattern recognition across the inference stages.

Presenter Notes

- The Simulation: This is the "show, don't tell" moment. Run the simulation to demonstrate the system in action.

- Telemetry: Point out the live metrics. This isn't just a cartoon; it represents real system performance.

- Small Multiples: Explain how the three panels on the left show different views of the same process (Retrieval, Reasoning, Provenance).

Theory to Features

How theoretical principles dictated the UX and Backend design of a solution named TacitFlow.

The EASCI framework links abstract theory to concrete software features: the prototype's UX and backend were built specifically to operationalize these theories.

| EASCI Stage | Theoretical Basis | TacitFlow Implementation (Hypothesis Test) |
|---|---|---|
| Experience | Dewey's Context-Embedded Apprenticeship (1938) | Behavioral Sensors: Capturing "learning by doing" via access logs. Voice Capture: Record insights during authentic work; automatic context metadata. |
| Articulation | Dennett's Intuition Pumps (2013) | AI Probes: The system queries "Why did you rule out X?" to force explicit reasoning, rather than just recording statements. |
| Structuring | Peirce's Abduction (1903) | Knowledge Objects (KOs): Data structure that captures provenance and confidence, not just facts. |
| Consolidation | Weick's Sensemaking (1995) | Consensus Algorithms: RAG retrieval aggregates multiple analyst perspectives to find patterns and validate insights. |
| Innovation | Whitehead's Process (1929) | Smart Forgetting: Retire outdated KOs; feedback loop to new experience. |

From Ephemeral Expertise to Permanent Memory

Critical Distinction

TacitFlow is not a chat interface. It is a guided elicitation environment designed to trigger the cognitive mechanisms defined in the EASCI framework.

Software as Hypothesis

Leinonen et al. (2008): The prototype is a research instrument for testing theoretical assumptions, not a commercial product. Features are hypotheses about what enables tacit knowledge transfer.

Cognitive Apprenticeship

Collins et al. (1989): Modeling, coaching, scaffolding, fading. TacitFlow's AI probes function as scaffolding that "fades" as analysts internalize reasoning patterns.

The Prototype

Unlike chatbots that predict tokens, TacitFlow structures claims with evidence. Every output follows W3C PROV-O provenance standards, making reasoning auditable.

Presenter Notes

- Theory-Driven Design: Emphasize that we didn't just build a "cool app" and then look for theory. The theory (Dewey, Dennett, Peirce) dictated the features.

- The "Why" behind the "What": For example, we use "AI Probes" not because they are trendy, but because Dennett's "Intuition Pumps" suggest we need to provoke thinking to get at tacit knowledge.

- Research Instrument: Remind the audience that this software is a hypothesis test. If it fails to capture knowledge, that is a valid research finding about the theory.

Data Context & Concepts: Foundations of the TacitFlow Architecture

TacitFlow is a knowledge engine built to transform raw data into structured, verifiable, and legally admissible intelligence.

1. Knowledge Objects (KOs)

The atomic unit of the system. A KO is a structured, verifiable claim (JSON-LD) containing:

- the insight or hypothesis

- source evidence (provenance)

- confidence level

- author attribution

KOs are designed to be machine-readable and interoperable with W3C PROV-O standards, ensuring legal chain-of-custody. This differentiates TacitFlow from systems operating on statistical prediction.

2. Tacit Knowledge

Tacit knowledge is unwritten intuition formed through experience. As Michael Polanyi (1966) stated: "We can know more than we can tell."

TacitFlow captures these fleeting judgments through Context-Embedded Apprenticeship and guided articulation during live work, converting intuition into explicit, searchable KOs before expertise is lost.

3. Grounded Reasoning (RAG)

TacitFlow is designed to prevent the AI from inventing facts. Retrieval-Augmented Generation (RAG) is used so that:

- all answers are grounded in verified KOs

- every output has a traceable lineage

- no hallucinated content enters casework

This "Groundedness" is essential for evidentiary standards. Legal and intelligence contexts cannot tolerate hallucinations.

KO Example (Simplified JSON-LD)

{
  "@context": "https://w3id.org/ko/v1",
  "@type": "KnowledgeObject",
  "id": "ko:uuid-...",
  "claim": "Suspect A linked to Van B",
  "evidence": ["ev:log-001", "ev:cam-02"],
  "confidence": 0.85,
  "author": "agent:lepik"
}

KOs encode claims with evidence following W3C PROV-O for legal chain-of-custody.
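A KO with the fields above could be built and checked in code roughly as follows. This is a sketch, not the TacitFlow schema. The class name, the validation rules, and the placeholder ID `ko:example-0001` are assumptions, and only the field names and the `@context` URL come from the example.

```python
import json
from dataclasses import dataclass


@dataclass
class KnowledgeObject:
    id: str
    claim: str
    evidence: list[str]
    confidence: float
    author: str

    def __post_init__(self) -> None:
        # Assumed rules: a KO needs evidence and a confidence in [0, 1].
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be in [0, 1]")
        if not self.evidence:
            raise ValueError("a KO needs at least one evidence reference")

    def to_jsonld(self) -> str:
        """Serialize to the simplified JSON-LD shape shown in the example."""
        doc = {
            "@context": "https://w3id.org/ko/v1",
            "@type": "KnowledgeObject",
            "id": self.id,
            "claim": self.claim,
            "evidence": self.evidence,
            "confidence": self.confidence,
            "author": self.author,
        }
        return json.dumps(doc, indent=2)


ko = KnowledgeObject(
    id="ko:example-0001",  # hypothetical placeholder, not a real identifier
    claim="Suspect A linked to Van B",
    evidence=["ev:log-001", "ev:cam-02"],
    confidence=0.85,
    author="agent:lepik",
)
print(ko.to_jsonld())
```

Rejecting a KO without evidence at construction time keeps unsupported claims out of the store, instead of relying on a later check.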

Signal-to-Noise Improvement

Transforming unmanageable raw data into actionable intelligence:

- Raw Intake: Petabytes

- Processed Data: Terabytes

- Knowledge Objects: Kilobytes

Source: Law Enforcement Common Challenges (2024)

Data Classification Standards

TacitFlow adheres to operational security levels, reinforcing why deployments must be offline, on-prem, and air-gapped:

- EU/NATO RESTRICTED: On-prem operational data.

- EU/NATO CONFIDENTIAL: Air-gapped investigation enclaves.

- EU/NATO SECRET: Strictly sealed, no egress.

Key References

KO Registry: Schema based on W3C PROV-O.

Polanyi (1966): The Tacit Dimension.

Lewis et al. (2020): Retrieval-Augmented Generation (NeurIPS).

Presenter Notes

- The "Atomic Unit": Explain that KOs are the currency of the system. The system does not trade in "documents" or "chats," it trades in verified claims.

- Signal-to-Noise: Use the side note to emphasize the massive reduction in cognitive load. Our approach turns petabytes of noise into kilobytes of truth.